The NHTSA Has Finally Exposed Musk's Lies About Tesla Safety

The April 25 report from the NHTSA's Office of Defects Investigation is devastating for Tesla and Musk.

Elon Musk has for years confected endless fantasies about the supposedly superior safety of Tesla cars, and about the imminence of those cars’ full self-driving capabilities. Last week, Musk’s world of make-believe had a full frontal collision with an extensive and careful investigation by the National Highway Traffic Safety Administration (the NHTSA).

The collision casualties include:

The claims of Musk and Tesla about Tesla safety, which were exposed as brazen lies.

The claims of Musk and Tesla about Tesla’s autonomous driving capabilities, which were further undermined.

Tesla’s reputation for honest reporting, which is now in tatters.

The likely beneficiaries of the collision include:

Personal injury attorneys, who can use the devastating NHTSA findings to support their claims that flaws in Tesla’s autonomous driving systems caused their clients’ injuries or deaths.

Class action attorneys, who can use those same findings to urge that in making their safety claims, both Tesla and Musk committed securities fraud.

Tesla customers who want to recover their share of the hundreds of millions of dollars they paid for the deeply flawed Enhanced Autopilot and Full Self-Driving (FSD) features.

(from the Warrenton, Virginia crash, discussed in this post)

A. The Office of Defects Investigation (ODI)

The NHTSA is a small part of the U.S. Department of Transportation, with only 750 or so employees, but with a big responsibility: investigating alleged safety-related defects in motor vehicles and motor vehicle equipment. An excellent (albeit dated) discussion of the NHTSA’s responsibilities and powers can be found here, along with a discussion of some of the key cases arising under the National Traffic and Motor Vehicle Safety Act (which I will refer to as the Safety Act, and which was originally enacted in 1966 and then re-codified in 1994).

An important part of the NHTSA is its Office of Defects Investigation (ODI), which as of November 2020 had approximately 90 employees. The NHTSA has described its mission, and ODI’s role in that mission, as follows:

The mission of the National Highway Traffic Safety Administration is to save lives, prevent injuries, and reduce economic costs due to road traffic crashes, through education, research, safety standards, and enforcement activity. In furtherance of this mission, the National Traffic and Motor Vehicle Safety Act (“Safety Act”), as amended, authorizes NHTSA to investigate issues relating to motor vehicle safety. The act also requires manufacturers to notify NHTSA of all safety-related defects involving unreasonable risk of accident, death, or injury and to execute recall campaigns providing vehicle and equipment owners with free repair or other remedy. The Office of Defects Investigation (ODI) at NHTSA plays a key role in executing this mission by gathering and analyzing relevant information, investigating potential defects, identifying unsafe motor vehicles and items of motor vehicle equipment, and managing the recall process.

The NHTSA publication linked to above includes a detailed explanation of the ODI’s data collection, data review, investigation, and recall management processes.

B. Tesla’s Autopilot Goes Under the ODI’s Microscope

Of key interest here is the investigation process. The ODI at first instituted a so-called Preliminary Evaluation (PE) of Tesla’s Autopilot in August of 2021. It described the PE’s origin and development as follows:

The investigation opening was motivated by an accumulation of crashes in which Tesla vehicles, operating with Autopilot engaged, struck stationary in-road or roadside first responder vehicles tending to preexisting collision scenes. Upon opening the investigation, NHTSA indicated that the PE would also evaluate additional similar circumstance crashes of Tesla vehicles operating with Autopilot engaged, as well as assess the technologies and methods used to monitor, assist, and enforce the driver’s engagement with the dynamic driving task during Autopilot operation.

In June of 2022, the PE was upgraded to a so-called Engineering Analysis (EA). As the ODI then explained, it did so to gather additional data and perform additional testing “to explore the degree to which Autopilot and associated Tesla systems may exacerbate human factors or behavioral safety risks by undermining the effectiveness of the driver’s supervision.”

Last Thursday, April 25, the ODI published a six-page report detailing the impressive amount of information it had collected and the status of its investigation. The ODI noted that Autopilot is merely a Level 2 driver assistance system, and that, in earlier discussions with the agency, Tesla had agreed that in certain circumstances Autopilot’s system controls might be insufficient to ensure constant supervision by a human driver, resulting in “avoidable crashes” that a more attentive driver would have prevented.

As the ODI noted, Tesla instituted a recall last December to address the ODI’s concerns. Tesla handled that recall not with any hardware changes, but entirely by means of over-the-air (OTA) software updates.

C. Were the OTA Updates Sufficient?

Some Tesla enthusiasts (or, more accurately, Tesla shareholders) have urged that the April 25 “Additional Information” report from the ODI is bullish for Tesla because it indicates that the NHTSA has now concluded its work, and in light of last December’s OTA update, all is well:

Wishful thinking, but wrong. The latest ODI update is poisonous for Tesla for at least four reasons:

it details numerous defects in Tesla’s autonomous driving systems that have already caused serious (and preventable) accidents;

it exposes enormous shortcomings in Tesla’s collection of data from collisions;

it suggests that Tesla’s Autopilot, far from leading to safer driving than the national average, may result in a crash rate of about double the national average; and

it suggests that far from being concluded, the investigation continues (and, gentle readers, when such an investigation continues, it historically does not bode well for the target of the investigation).

1. Accidents in which Autopilot Was a Contributing Cause

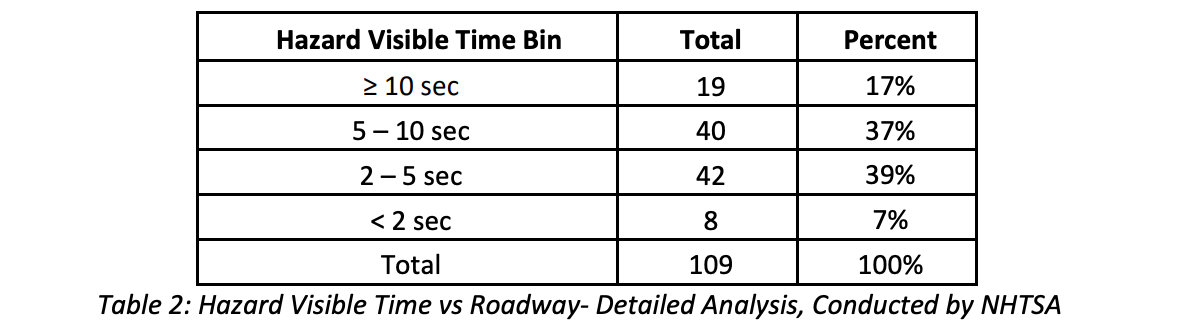

The ODI concluded that, before the OTA update, “Autopilot’s design was not sufficient to maintain drivers’ engagement.” It noted that in 109 out of 143 crashes from its detailed analysis, an engaged driver would have seen the hazard and likely avoided it:

The ODI detailed a fatal July 2023 crash in which it noted that had the driver been attentive (that is, not lulled by the promise of “Autopilot”), the driver would still be alive. The ODI also included this description:

Quite obviously, these findings will be highly useful to personal injury attorneys pursuing injury and death claims against Tesla. Consider this:

Sure, Tesla will continue to point to its fine-print cautions that drivers should remain fully engaged. But lots of luck with that. What the NHTSA has just explained, with powerful data, is how Autopilot by its very nature decreases driver engagement (or, at the very least, did so until the December 2023 OTA update).

2. Tesla Collects Data for a Small Percentage of Crashes

The ODI update indicates that Tesla files a safety report with the NHTSA only when there has been “an airbag or other active restraint deployed.” Yet, it notes, airbags are deployed in only 18% of police-reported crashes. It’s possible that the percentage of Tesla crashes reported by Tesla is higher than 18% because, besides airbags, other active restraints are seat-belt pre-tensioners and the pedestrian impact mitigation feature of the vehicle hood. But it’s doubtful that the percentage is much higher.

Consequently, the ODI noted that its “review uncovered crashes for which Autopilot was engaged that Tesla was not notified of via telematics.”
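The practical consequence of the 18% figure is easy to see with a little arithmetic. The Python sketch below is only an illustration under stated assumptions: it assumes, hypothetically, that the crashes Tesla learns of via telematics are a representative sample of all its crashes, captured at roughly the 18% airbag-deployment rate the ODI cited for police-reported crashes. The function name and the input count of 100 reported crashes are made up for the example. Under those assumptions, each reported crash stands for roughly five to six actual crashes.

```python
def estimated_total_crashes(reported_crashes: float, capture_fraction: float) -> float:
    """Scale a count of telematics-reported crashes up to an estimate of all crashes.

    Hypothetical model: reported crashes are assumed to be a representative
    sample of all crashes, captured at the rate `capture_fraction`.
    """
    if not 0 < capture_fraction <= 1:
        raise ValueError("capture_fraction must be in (0, 1]")
    return reported_crashes / capture_fraction


# Hypothetical input: 100 crashes reported via telematics.
reported = 100
# Assumption: capture rate equals the ~18% airbag-deployment share the ODI cited.
capture = 0.18

total = estimated_total_crashes(reported, capture)
print(f"Estimated total crashes: {total:.0f}")   # 556
print(f"Undercount factor: {1 / capture:.1f}x")  # 5.6x
```

If the true capture rate is somewhat higher than 18% (for example, because seat-belt pre-tensioners also trigger a report), the undercount factor shrinks accordingly; passing a different `capture_fraction` shows how sensitive the estimate is to that assumption.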

3. Tesla Cars Appear to Have an Abnormally High Number of Accidents

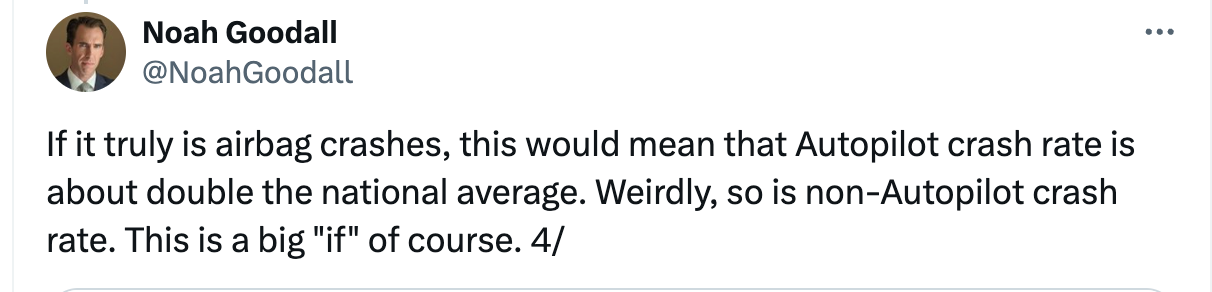

One of the most qualified, careful, and understated researchers of vehicle safety is Noah Goodall of Cornell University. You can find some of his recent work here. His thread on X (Twitter) regarding the ODI’s April 25 report is well worth reading. Among other things, Goodall noted the ODI’s finding that Tesla telematics reported only about 18% of the crashes in which Tesla vehicles were involved, and wrote this (chart omitted):

Yes, a big “if.” And yet… (again, chart omitted):

Obviously, more investigation and analysis are needed. But pending such investigation and analysis, for Elon Musk to continue crowing about how much safer Tesla’s Autopilot is relative to a human driver is reckless and dangerous. He now runs a real risk of being held accountable by customers who bought Tesla cars, and investors who bought Tesla stock, in reliance on what appear to be brazen lies by someone who knew, or should have known, better.

And, I might note, the data at the invaluable Tesla Deaths website (collected by X’s @icapulet) is, to say the least, alarming.

4. The ODI Is Not Finished with Tesla

So, per TeslaBoomerPapa, is the NHTSA done? Is Tesla now in the clear?

Nothing in the “Additional Information” report so suggests. Indeed, it is difficult for me to understand how anyone reading the final three paragraphs of the report can so conclude:

If I had to guess, my guess would be that if Tesla continues to use the term “Autopilot,” then Tesla and the NHTSA will find themselves in a courtroom soon. And, I might note, I have been able to locate only one instance in which the NHTSA has lost (in contrast to many where it has prevailed) when it has taken an automaker to court. Automakers these days know better.

And, despite what you read from TeslaBoomerPapa, and despite how much Elon Musk struggles to say otherwise, Tesla is an automaker. Not an AI company. Not a robotics company. Not an energy company. But an automaker.
